Abstract:

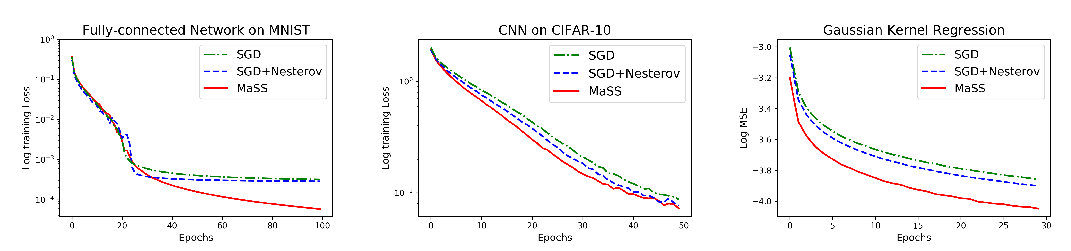

Mini-batch stochastic gradient descent (SGD) methods are the state of the art for distributed training of deep neural networks.

Drastic increases in mini-batch size have led to key efficiency and scalability gains in recent years.

However, progress faces a major roadblock: models trained with large batches often generalize poorly, i.e., they do not achieve good accuracy on new data.

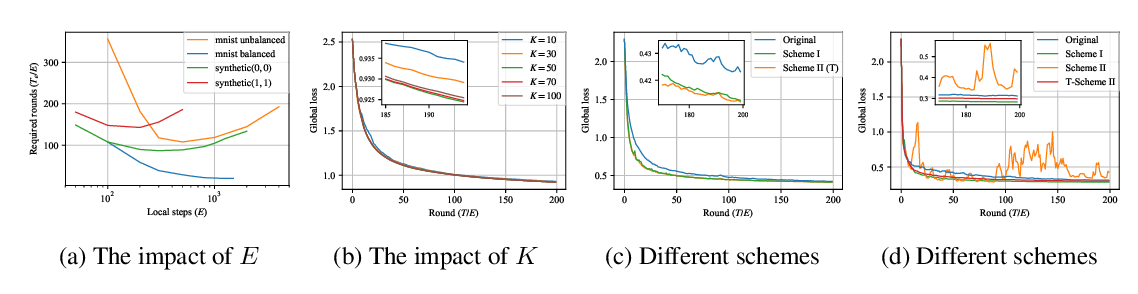

As a remedy, we propose \emph{post-local SGD} and show that it significantly improves generalization performance compared to large-batch training on standard benchmarks while retaining the same efficiency (time-to-accuracy) and scalability. We further provide an extensive study of the trade-offs between communication efficiency and performance for a host of \emph{local SGD} variants.
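The abstract does not spell out the update rule, so the following is only a minimal sketch under stated assumptions. It assumes the usual formulation of local SGD, in which each worker takes H local gradient steps before the workers average their models. It also assumes that post-local SGD runs with H = 1 (equivalent to synchronizing after every step) for an initial phase and switches to H > 1 afterwards. The toy quadratic objective and the names `local_sgd`, `post_local_schedule`, `switch_step`, and `H` are hypothetical and chosen purely for illustration.

```python
import random


def local_sgd(num_workers, total_steps, local_steps_fn, lr=0.1, seed=0):
    """Toy local SGD: worker k minimizes 0.5 * (w - target_k)^2 with noisy gradients.

    local_steps_fn(t) returns the number of local steps H to take between
    model averagings at global step t (an illustrative interface, not a real API).
    """
    rng = random.Random(seed)
    targets = [rng.uniform(-1.0, 1.0) for _ in range(num_workers)]
    workers = [5.0] * num_workers  # every worker starts from the same model
    sync_count = 0
    since_sync = 0
    for t in range(total_steps):
        # Each worker takes one local step on its own noisy gradient.
        for k in range(num_workers):
            grad = (workers[k] - targets[k]) + rng.gauss(0.0, 0.1)
            workers[k] -= lr * grad
        since_sync += 1
        # Communicate (average the models) once H local steps have elapsed.
        if since_sync >= local_steps_fn(t):
            avg = sum(workers) / num_workers
            workers = [avg] * num_workers
            sync_count += 1
            since_sync = 0
    return sum(workers) / num_workers, sync_count, targets


def post_local_schedule(switch_step, H):
    """Assumed post-local schedule: H = 1 before switch_step, H local steps after."""
    return lambda t: 1 if t < switch_step else H


if __name__ == "__main__":
    w, syncs, targets = local_sgd(4, 100, post_local_schedule(40, 4))
    print("averaged model:", w)
    print("optimum of the averaged objective:", sum(targets) / len(targets))
    print("communication rounds:", syncs)
```

With these illustrative settings (100 steps, switch at step 40, H = 4), the schedule performs 55 averaging rounds, compared with 100 when every step is synchronized, which is the kind of communication saving that local SGD variants trade against accuracy.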